Leaving the baby shoes

When people think of machine learning, they often picture gigantic models running on cloud servers. But with ongoing improvements, more and more applications are becoming possible at the edge, and there efficiency is king.

Many methods have been suggested for making neural networks more efficient. Most of them live on the software side, but one really interesting method also changes what the hardware has to do.

Quantization: Training is usually done with 32- or 64-bit floating-point calculations. It turns out that for inference, 8-bit integers or even lower resolutions cause almost no loss of accuracy. Using 8 bits instead of 32 shrinks the model to a quarter of its size and cuts the memory bandwidth needed to load the weights by the same factor.
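To make the idea concrete, here is a minimal sketch of 8-bit quantization in plain Python. It assumes a simple affine scheme (one scale and one zero point per weight list); the function names `quantize` and `dequantize` are made up for this example and are not TensorFlow APIs, and real toolchains use more refined variants.

```python
import random
import struct


def quantize(weights, num_bits=8):
    """Map floats onto integers in [0, 2**num_bits - 1] (assumed affine scheme)."""
    qmin, qmax = 0, 2 ** num_bits - 1
    # Extend the range so that 0.0 is always exactly representable.
    w_min = min(min(weights), 0.0)
    w_max = max(max(weights), 0.0)
    scale = (w_max - w_min) / (qmax - qmin) or 1.0  # avoid a zero scale
    zero_point = round(-w_min / scale)
    quantized = [
        min(qmax, max(qmin, round(w / scale) + zero_point)) for w in weights
    ]
    return quantized, scale, zero_point


def dequantize(quantized, scale, zero_point):
    """Recover approximate float values from the integer codes."""
    return [scale * (q - zero_point) for q in quantized]


# Hypothetical "layer" of 1000 random weights, just for illustration.
random.seed(0)
weights = [random.gauss(0.0, 0.5) for _ in range(1000)]

q_weights, scale, zero_point = quantize(weights)
restored = dequantize(q_weights, scale, zero_point)

float_bytes = len(struct.pack("<%df" % len(weights), *weights))  # 32-bit floats
int8_bytes = len(bytes(q_weights))                               # 8-bit integers
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("float32 size:", float_bytes, "bytes")
print("8-bit size:  ", int8_bytes, "bytes")
print("largest round-trip error:", max_error, "(one step is", scale, ")")
```

The storage drops by a factor of four, while each restored weight stays within roughly one quantization step of its original value. That small error is what the network has to tolerate at inference time.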

On a general-purpose CPU, quantized neural networks won’t see a huge performance gain. These CPUs already have dedicated hardware, the floating-point unit (FPU), to do 32- or 64-bit calculations efficiently.

But when you design a chip from scratch, being able to swap out expensive FPUs for simple, vector-optimized 8-bit arithmetic can be a huge space and energy saver.
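The following sketch illustrates why such hardware can get by without an FPU in its inner loop. It assumes symmetric 8-bit quantization with a single scale per vector; `quantize_symmetric` and `int_dot` are hypothetical helper names, and the numbers are made up. The multiply-accumulate loop touches only integers, and a single floating-point rescale happens at the very end.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats onto signed integers in [-127, 127]; 0.0 maps to 0."""
    qmax = 2 ** (num_bits - 1) - 1
    scale = max(abs(v) for v in values) / qmax or 1.0  # avoid a zero scale
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def int_dot(q_a, q_b):
    """Dot product using integer multiply-accumulate only.

    Dedicated hardware would keep the sum in a wider accumulator
    (for example 32 bits) so that it cannot overflow.
    """
    acc = 0
    for a, b in zip(q_a, q_b):
        acc += a * b
    return acc


# Made-up weights and inputs of a single neuron.
weights = [0.12, -0.53, 0.97, 0.04]
inputs = [1.5, -0.25, 0.75, 2.0]

q_w, scale_w = quantize_symmetric(weights)
q_x, scale_x = quantize_symmetric(inputs)

# One float multiplication converts the integer result back to real units.
approx = int_dot(q_w, q_x) * (scale_w * scale_x)
exact = sum(w * x for w, x in zip(weights, inputs))

print("float result:    ", exact)
print("quantized result:", approx)
```

Because the loop body is nothing but small integer multiplications and additions, many of them can be packed side by side in the silicon area that one floating-point unit would occupy.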

TensorFlow, Lite, and Micro

With the release of TensorFlow 1.9, Google turned a cross-compilation adventure into a quick pip install. This made experimenting with machine learning on the Raspberry Pi a lot more accessible for makers. But for most production use cases, the full TensorFlow framework is simply not necessary.

For this reason, the TensorFlow team created TensorFlow Mobile, a lightweight version meant for inference only. That was already a big step down in size, but it is now being replaced by TensorFlow Lite, which promises to be even smaller. And just recently, a new experimental folder called Micro popped up in the TensorFlow GitHub repository. It targets systems like STM32 microcontrollers.

This is just one of many signals that machine learning is finally maturing and ready for mainstream use, not only in the cloud but especially on embedded systems. If we look at this trend, it’s clear where we are going.

Given the great success of quantized models, specialized hardware can drop those expensive floating-point units and fit far more of the much more efficient 8-bit vector operations into a given silicon area. These chips can be optimized even further for the operations that are common in machine learning models.

With the current state of the art, it’s possible to do a galaxy of tasks on cheap single-board computers priced between $5 and $100.

The era of microcontrollers

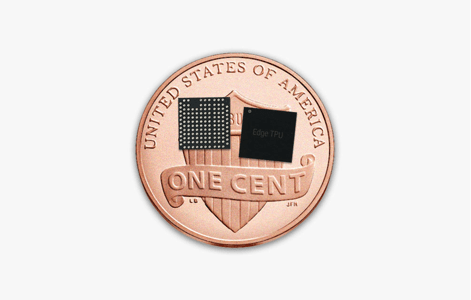

What’s even more impressive is that specialized hardware for machine learning is finally arriving. Take a look at Google’s new Edge TPU and its Raspberry Boards. Smaller than a penny, the Edge TPU comes with some impressive claims:

“Edge TPU enables users to concurrently execute multiple state-of-the-art AI models per frame, on a high-resolution video, at 30 frames per second, in a power-efficient manner. When designing Edge TPU we were hyper focused on optimizing for performance per dollar and performance per watt.”

While there is no detailed information about price and performance yet, this will definitely enable some interesting applications: high-speed image recognition and classification, realistic text-to-speech with WaveNet running locally, or highly accurate on-device speech recognition, making the smartest Furby on the planet.

The Edge TPU will run quantized models on TensorFlow Lite, which is available now as a developer preview: still under development, but already usable. Now is the time to work out your ideas and test your models so you can be among the first on the market. This is a booming new area with a lot of room for growth.

If you want a first-hand feel for this, clone our GitHub repository and play around with audio recognition and speech commands.

Imagine what’s possible with this

Babelfish: Speech recognition, translation, and text-to-speech in a headset that translates live

Smart camera: Analyzing the camera image in real time and choosing the best settings for you

Industrial processes: Inspecting all kinds of products for defects at high speed

Expect to see much more machine learning in embedded systems. Now is the right time to get started.